Which Of The Following Is Not True About Deep Learning


Mar 25, 2025 · 7 min read


Which of the Following is NOT True About Deep Learning? Debunking Common Myths

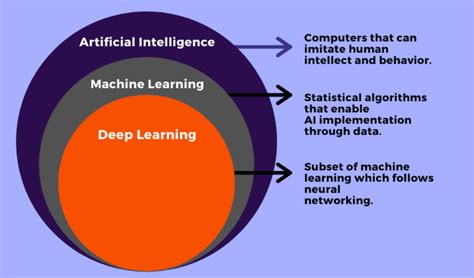

Deep learning, a subfield of machine learning, has transformed applications ranging from image recognition to natural language processing. Its impressive capabilities often lead to misconceptions. This article aims to debunk common myths surrounding deep learning, clarifying what it can do and, importantly, what it cannot. We'll examine several statements about deep learning, determine which are inaccurate, and explore the nuances of this powerful technology.

Myth 1: Deep Learning Requires Massive Datasets for All Tasks

While it's true that deep learning models often benefit from large datasets, the statement that all deep learning tasks necessitate massive amounts of data is false. The data requirements are heavily dependent on the complexity of the task and the architecture of the model.

The Reality: Data Efficiency in Deep Learning

Recent advances have focused on improving data efficiency in deep learning. Transfer learning, in which a model pre-trained on a large dataset is fine-tuned on a smaller, task-specific dataset, significantly reduces the need for massive data collection. Data augmentation, which artificially expands the training set by creating modified versions of existing examples (for instance, flipped or slightly perturbed images), can also reduce the amount of raw data required. Some architectures are also inherently more data-efficient than others for a given kind of input: convolutional neural networks (CNNs), for example, build in assumptions such as local connectivity and weight sharing that suit image data, which helps them learn from fewer images than a fully connected network would need.
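To make data augmentation concrete, here is a minimal sketch that turns each labeled example into several randomly modified copies. It assumes, purely for illustration, that a grayscale image is a plain list of pixel rows with values in [0, 1], and it uses two simple transformations (a horizontal flip and small random noise); the function names are hypothetical, and real pipelines would rely on an image library or a framework's augmentation utilities.

```python
import random

# Assumption for illustration: a grayscale image is a list of rows of pixel
# intensities in [0, 1]. Real pipelines would use an image library instead.
Image = list[list[float]]


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def add_noise(img: Image, scale: float, rng: random.Random) -> Image:
    """Add small uniform noise to every pixel, clamping results to [0, 1]."""
    return [
        [min(1.0, max(0.0, p + rng.uniform(-scale, scale))) for p in row]
        for row in img
    ]


def augment(dataset: list[tuple[Image, int]], copies: int, seed: int = 0) -> list[tuple[Image, int]]:
    """Return the original examples plus `copies` randomly modified versions of each.

    Labels are copied unchanged, which assumes the transformations do not
    change the class (reasonable for left/right flips of many photos, but not
    for inputs where orientation matters, such as text).
    """
    rng = random.Random(seed)
    augmented = list(dataset)
    for img, label in dataset:
        for _ in range(copies):
            new_img = horizontal_flip(img) if rng.random() < 0.5 else img
            new_img = add_noise(new_img, scale=0.05, rng=rng)
            augmented.append((new_img, label))
    return augmented


if __name__ == "__main__":
    data = [([[0.0, 0.5, 1.0], [0.2, 0.4, 0.6]], 1)]
    print(len(augment(data, copies=3)))  # 4: the original plus three variants
```

The key design constraint is that every transformation must preserve the label; choosing transformations that respect the problem's invariances matters more than the number of copies generated.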

Smaller Datasets & Successful Applications

Successfully deploying deep learning models on smaller datasets is demonstrably possible. This is particularly true in specialized domains with limited data availability but a well-defined problem. Consider medical diagnosis, where obtaining large datasets might be ethically or logistically challenging. In these scenarios, careful data preprocessing, model selection, and regularization techniques are crucial to achieving satisfactory performance.
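One widely used regularizer is an L2 penalty on the weights, which deep learning frameworks often implement as weight decay. Its effect is easiest to see in a one-parameter linear model, where the penalized solution has a closed form. The sketch below is that toy case, not a deep network; the dataset, the function name `ridge_weight`, and the `lam` values are made up for illustration.

```python
from typing import Sequence


def ridge_weight(xs: Sequence[float], ys: Sequence[float], lam: float) -> float:
    """Closed-form weight for the one-parameter model y ≈ w * x with an L2 penalty.

    Minimizes sum((y - w*x)**2) + lam * w**2. Setting the derivative to zero
    gives w = sum(x*y) / (sum(x*x) + lam); lam = 0 recovers ordinary least squares.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


if __name__ == "__main__":
    # Hypothetical three-point dataset.
    xs, ys = [1.0, 2.0, 3.0], [1.2, 1.9, 3.1]
    for lam in (0.0, 1.0, 5.0):
        print(lam, round(ridge_weight(xs, ys, lam), 4))
```

Larger `lam` values pull the weight further toward zero, trading a slightly worse fit on the training points for a simpler model; with very little data, that trade can help keep a model from memorizing noise.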

Myth 2: Deep Learning Models are Always "Black Boxes"

The assertion that deep learning models are always opaque and uninterpretable is an oversimplification. While the internal workings of deep neural networks are complex and difficult to understand fully, researchers have made real progress in making them more transparent and interpretable.

The Reality: Methods for Understanding Deep Learning Models

Several techniques help shed light on the decision-making processes of deep learning models:

- Feature visualization: This technique helps visualize the features learned by different layers of the network, providing insights into what aspects of the input data the model is focusing on.

- Saliency maps: These highlight the parts of the input data that are most influential in the model's prediction, providing a degree of explainability.

- Layer-wise relevance propagation (LRP): This method propagates the prediction back through the network to identify which input features contributed most to the final outcome.

- Attention mechanisms: These mechanisms let the model weight specific parts of the input more heavily, and the learned attention weights can be inspected to see where the model focused, although they do not always faithfully explain a prediction.

These methods, while not providing a complete understanding, offer valuable insights into how deep learning models arrive at their predictions, reducing the "black box" problem.
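Gradient-based saliency asks how much the model's output changes when each input feature is nudged. The sketch below estimates that sensitivity with central finite differences on a hypothetical, hand-weighted two-layer network (`tiny_model` and its weights are invented for illustration). In practice, frameworks compute the exact gradient by backpropagation, which is far cheaper for inputs with many features such as images.

```python
import math
from typing import Callable, Sequence


def tiny_model(x: Sequence[float]) -> float:
    """A hypothetical, fixed two-layer network producing a score in (0, 1)."""
    # Hidden layer: two ReLU units with hand-picked weights (illustrative only).
    h1 = max(0.0, 2.0 * x[0] - 1.0 * x[1])
    h2 = max(0.0, 0.5 * x[1] + 0.1 * x[2])
    # Output layer followed by a sigmoid.
    z = 1.5 * h1 + 1.0 * h2 - 0.5
    return 1.0 / (1.0 + math.exp(-z))


def saliency(model: Callable[[Sequence[float]], float], x: Sequence[float], eps: float = 1e-5) -> list[float]:
    """Approximate |d model / d x_i| for each input feature by central differences."""
    scores = []
    for i in range(len(x)):
        plus, minus = list(x), list(x)
        plus[i] += eps
        minus[i] -= eps
        grad_i = (model(plus) - model(minus)) / (2 * eps)
        scores.append(abs(grad_i))
    return scores


if __name__ == "__main__":
    x = [1.0, 0.5, 2.0]
    # Larger scores mark features the prediction is most sensitive to here.
    print([round(s, 4) for s in saliency(tiny_model, x)])
```

A saliency score describes local sensitivity around one particular input, not global importance, which is one reason these tools offer partial insight rather than a complete explanation.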

Myth 3: Deep Learning Solves Every Problem

The belief that deep learning is a panacea for all machine learning problems is demonstrably false. Deep learning is a powerful tool, but it's not universally applicable.

The Reality: Limitations of Deep Learning

Deep learning faces several limitations:

- Data dependency: Deep learning models typically need large amounts of high-quality data to reach their best performance. When data is limited and techniques such as transfer learning do not apply, other machine learning methods may be more suitable.

- Computational cost: Training deep learning models can be computationally expensive, requiring significant computing power and time.

- Interpretability challenges: As discussed earlier, understanding the decision-making process of deep learning models can be challenging. This is a major concern in applications where explainability is crucial, such as medical diagnosis or financial modeling.

- Overfitting: Deep learning models, with their high capacity, are prone to overfitting, where they learn the training data too well and perform poorly on unseen data.

- Lack of generalizability: A deep learning model trained on one specific dataset may not generalize well to other datasets, even if the datasets represent similar phenomena.
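A common guard against overfitting is early stopping: monitor the loss on held-out validation data and stop training once it stops improving. The sketch below only simulates the stopping rule over a list of per-epoch validation losses; the function name, the `patience` and `min_delta` parameters, and the loss values are illustrative assumptions, not any specific framework's API.

```python
from typing import Sequence


def early_stop(val_losses: Sequence[float], patience: int = 3, min_delta: float = 0.0) -> tuple[int, int]:
    """Simulate patience-based early stopping over per-epoch validation losses.

    Returns (best_epoch, stop_epoch), both 0-based. Training halts once the
    validation loss has failed to improve by more than `min_delta` for
    `patience` consecutive epochs; the weights from `best_epoch` are the ones
    you would keep.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # Ran out of epochs without triggering the stop condition.
    return best_epoch, len(val_losses) - 1


if __name__ == "__main__":
    # Made-up losses: validation loss bottoms out, then rises as the model overfits.
    losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.68]
    print(early_stop(losses, patience=3))  # (3, 6): best at epoch 3, stopped at epoch 6
```

A rising validation loss while training loss keeps falling is the classic signature of overfitting; early stopping simply refuses to keep training past that point.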

Choosing the right algorithm depends on the specific problem, available data, computational resources, and the need for interpretability. Traditional machine learning techniques often offer simpler, more efficient, and more interpretable solutions for certain tasks.

Myth 4: Deep Learning Requires Specialized Hardware

While it's true that deep learning benefits significantly from specialized hardware like GPUs and TPUs, the statement that it requires such hardware is false.

The Reality: Deep Learning on Standard Hardware

While specialized hardware speeds up training and inference significantly, deep learning can also run on standard CPUs, albeit more slowly. CPU-only workflows are most practical for smaller models and less computationally intensive tasks. Cloud computing resources also offer scalable options, allowing researchers and developers without local access to specialized hardware to use deep learning techniques.

The choice of hardware depends on the size of the model, the dataset, and the desired training/inference speed. For smaller-scale projects or experimentation, standard hardware might be sufficient, making deep learning more accessible.
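As a small demonstration that no special hardware is needed for toy-scale work, here is a deliberately tiny network (2 inputs, 4 tanh hidden units, 1 sigmoid output) trained on the XOR problem with full-batch gradient descent, using only Python's standard library on an ordinary CPU. The architecture, learning rate, and epoch count are arbitrary choices for illustration; a real project would use a framework such as PyTorch or TensorFlow, both of which can also run on CPUs.

```python
import math
import random
from typing import Callable, Sequence


def train_xor(hidden: int = 4, epochs: int = 5000, lr: float = 0.5,
              seed: int = 0) -> tuple[list[float], Callable[[Sequence[float]], float]]:
    """Train a 2-hidden-1 network on XOR; return per-epoch mean losses and a predictor."""
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
    # Parameters: W1 (hidden x 2), b1 (hidden), w2 (hidden), b2 (scalar).
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x: Sequence[float]) -> tuple[list[float], float]:
        h = [math.tanh(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        z = sum(w2[j] * h[j] for j in range(hidden)) + b2
        return h, 1.0 / (1.0 + math.exp(-z))

    losses = []
    n = len(data)
    for _ in range(epochs):
        # Accumulate gradients over the full batch of four examples.
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        loss = 0.0
        for x, y in data:
            h, p = forward(x)
            pc = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0)
            loss -= y * math.log(pc) + (1 - y) * math.log(1 - pc)
            dz = p - y  # gradient of cross-entropy w.r.t. the pre-sigmoid output
            gb2 += dz
            for j in range(hidden):
                gw2[j] += dz * h[j]
                dh = dz * w2[j] * (1 - h[j] ** 2)  # backpropagate through tanh
                gW1[j][0] += dh * x[0]
                gW1[j][1] += dh * x[1]
                gb1[j] += dh
        losses.append(loss / n)  # mean loss before this epoch's update
        # Gradient-descent step with the mean gradient.
        b2 -= lr * gb2 / n
        for j in range(hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            W1[j][0] -= lr * gW1[j][0] / n
            W1[j][1] -= lr * gW1[j][1] / n

    def predict(x: Sequence[float]) -> float:
        return forward(x)[1]

    return losses, predict


if __name__ == "__main__":
    losses, predict = train_xor()
    print("first/last loss:", round(losses[0], 3), round(losses[-1], 3))
    for x in [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]:
        print(x, round(predict(x), 3))
```

Pure Python is orders of magnitude slower than an optimized framework, so this only illustrates feasibility at toy scale; the gap is exactly why GPUs and TPUs matter for large models.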

Myth 5: Deep Learning is Only for Experts

The assertion that only experts can implement and utilize deep learning is false. While mastering the theoretical underpinnings of deep learning requires significant expertise, utilizing pre-trained models and user-friendly frameworks has made deep learning more accessible to non-experts.

The Reality: Accessible Deep Learning Frameworks

Frameworks like TensorFlow, PyTorch, and Keras offer high-level APIs that simplify the process of building and deploying deep learning models. These frameworks abstract away much of the low-level complexity, allowing users with less deep learning expertise to put it to work. Moreover, widely available pre-trained models greatly reduce the need for extensive data collection and training from scratch.

Myth 6: Deep Learning Always Outperforms Other Machine Learning Techniques

The statement that deep learning invariably surpasses other machine learning techniques is false. The optimal machine learning approach depends on the specific problem and dataset.

The Reality: Comparative Performance

While deep learning excels in areas like image recognition and natural language processing, it doesn't automatically outperform other methods in all contexts. Simpler models, like linear regression or support vector machines (SVMs), may be more appropriate for smaller datasets or problems where interpretability is paramount. On structured, tabular data in particular, ensemble methods such as gradient-boosted decision trees, which combine many simple models, frequently match or outperform deep networks. The best approach usually involves experimenting with and comparing several techniques.
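In that spirit, a reasonable first step is to fit a simple baseline and measure it on held-out data before reaching for a deep network. The sketch below fits an ordinary least-squares line with a single feature and compares it with a trivial predict-the-mean baseline; the data points and the train/test split are made up for illustration.

```python
from statistics import mean
from typing import Sequence


def fit_simple_linear(xs: Sequence[float], ys: Sequence[float]) -> tuple[float, float]:
    """Ordinary least squares for y ≈ intercept + slope * x with a single feature."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def mse(preds: Sequence[float], ys: Sequence[float]) -> float:
    """Mean squared error between predictions and targets."""
    return mean((p - y) ** 2 for p, y in zip(preds, ys))


if __name__ == "__main__":
    # Hypothetical data with a roughly linear trend; first six points train, last two test.
    xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    ys = [3.1, 4.9, 7.2, 8.8, 11.1, 13.0, 14.9, 17.2]
    train_x, train_y, test_x, test_y = xs[:6], ys[:6], xs[6:], ys[6:]

    intercept, slope = fit_simple_linear(train_x, train_y)
    linear_preds = [intercept + slope * x for x in test_x]
    # Trivial baseline: always predict the training mean.
    mean_preds = [mean(train_y)] * len(test_x)

    print("linear MSE:", round(mse(linear_preds, test_y), 3))
    print("mean-only MSE:", round(mse(mean_preds, test_y), 3))
```

If a two-parameter model already explains the data this well, a deep network adds cost and opacity without much room to improve; a baseline like this gives any more complex model a concrete number to beat.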

Myth 7: Deep Learning is a Solved Field

The idea that deep learning is a fully mature and completely understood field is definitively false. Deep learning is a constantly evolving field with ongoing research and development.

The Reality: Active Research and Development

Active research areas include:

- Improved model architectures: Researchers are continually developing new and more efficient model architectures.

- Enhanced training techniques: New training methods are being developed to improve the efficiency and accuracy of deep learning models.

- Explainable AI (XAI): Significant effort is devoted to improving the interpretability of deep learning models.

- Addressing bias and fairness: Researchers are actively working to mitigate bias and ensure fairness in deep learning models.

- Generalization capabilities: Improving the ability of deep learning models to generalize to unseen data remains a key area of research.

These ongoing advances mean deep learning continues to progress, and further gains in performance and capability are likely.

Conclusion: A Balanced Perspective on Deep Learning

Deep learning is a powerful and transformative technology, but it's crucial to have a balanced understanding of its capabilities and limitations. By dispelling common myths and appreciating the nuances of this field, we can better utilize its potential while avoiding unrealistic expectations. The future of deep learning lies not just in its continued advancement, but also in responsible and informed application, ensuring its benefits are harnessed effectively across various domains. Understanding its limitations is as critical as understanding its strengths for successful and ethical implementation.
